Tesla wants HBM4

Tesla plans to integrate specially developed HBM4 chips into its Dojo supercomputer. Dojo is the powerful artificial intelligence system the company built to train the neural networks behind its "Full Self-Driving" (FSD) software. According to industry experts, Tesla aims to use the new memory not only in Dojo but also in its data centers and future autonomous vehicles.

Tesla's Dojo system trains the complex AI models that support FSD capabilities, and it currently uses older-generation HBM2e chips. However, according to a TrendForce report, Tesla wants to take advantage of the performance gains offered by HBM4.

Another expected HBM4 innovation is an integrated logic die that sits beneath the memory stack and acts as a controller. This controller could bring a new level of speed and power optimization, making HBM4 well suited to AI data processing.

The HBM market is growing, and competition is intensifying

The HBM chip market is expected to reach 33 billion dollars by 2027, and Samsung and SK Hynix are fighting fiercely for leadership in this field. Besides Tesla, American technology giants such as Nvidia, Microsoft, Meta and Google are also evaluating these chips. It is worth noting, however, that Samsung currently trails the competition. Meanwhile, Micron is targeting similar dates and is already supplying Nvidia. Apart from these three companies, no one mass-produces HBM memory.

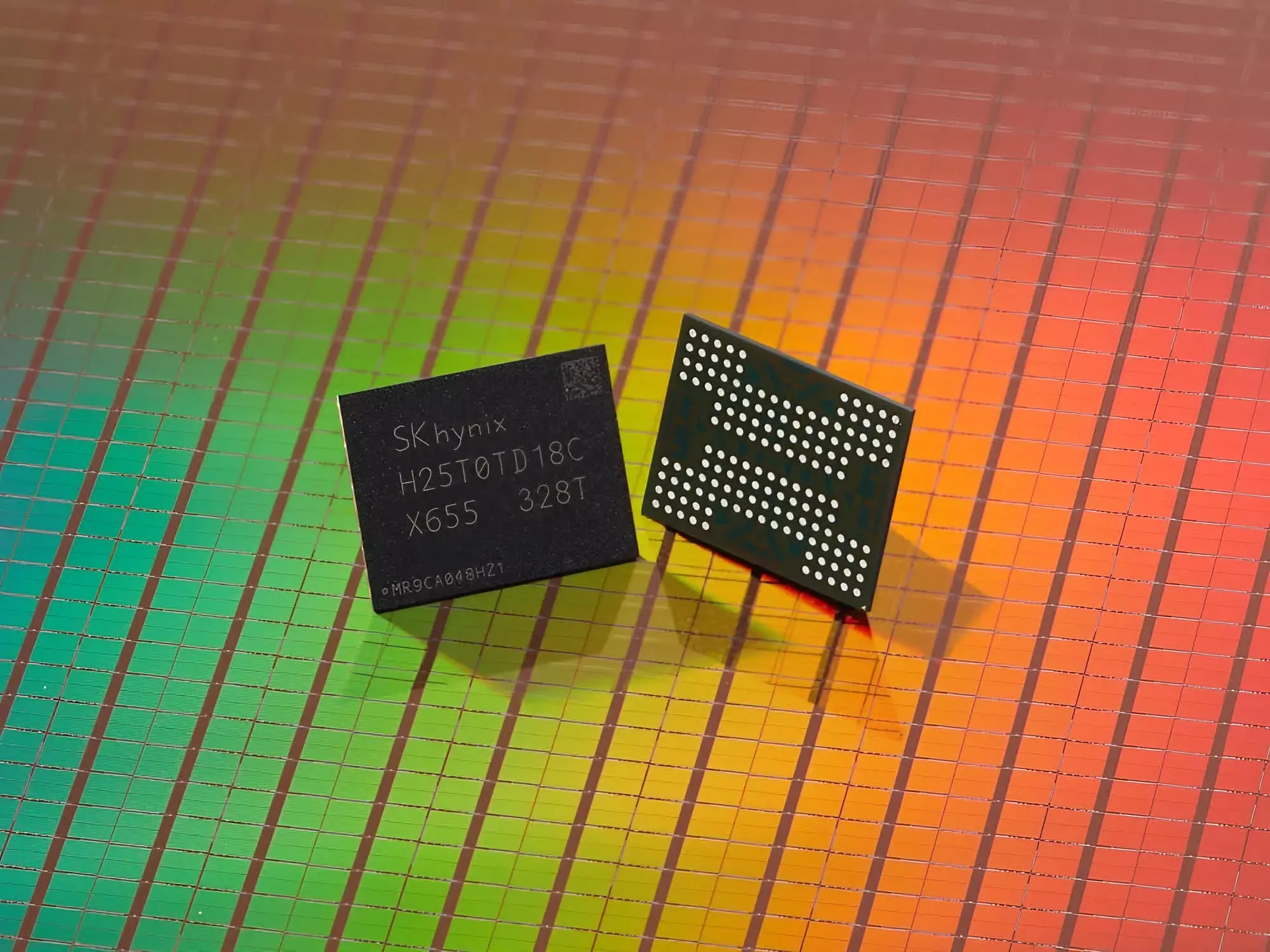

SK Hynix currently leads the market by supplying chips to Nvidia and aims to start HBM4 production in late 2025. The company was also the first to launch 321-layer TLC NAND flash memory. Not to be left behind, Samsung is working with Taiwan's TSMC to produce key components using the advanced 4nm process node.