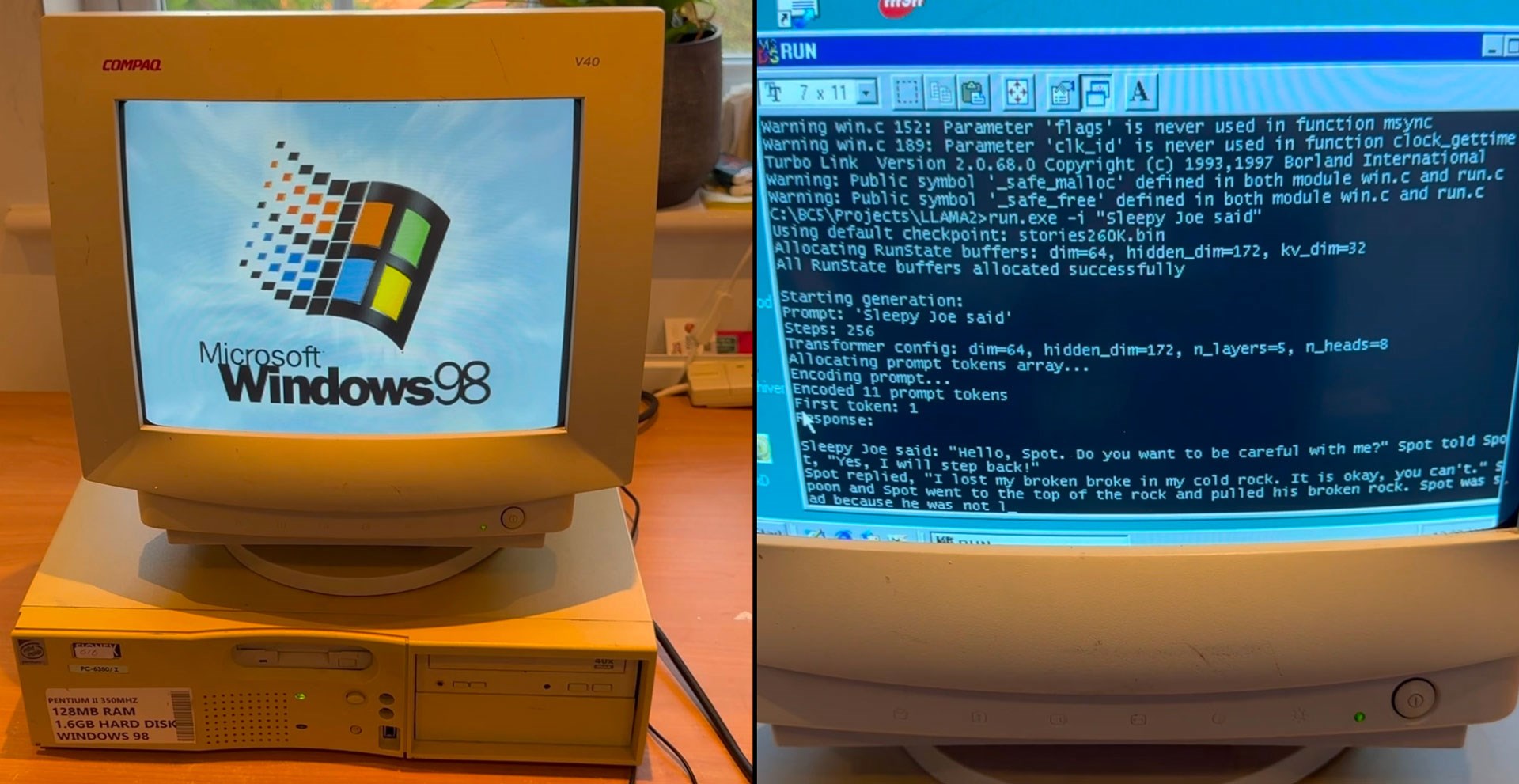

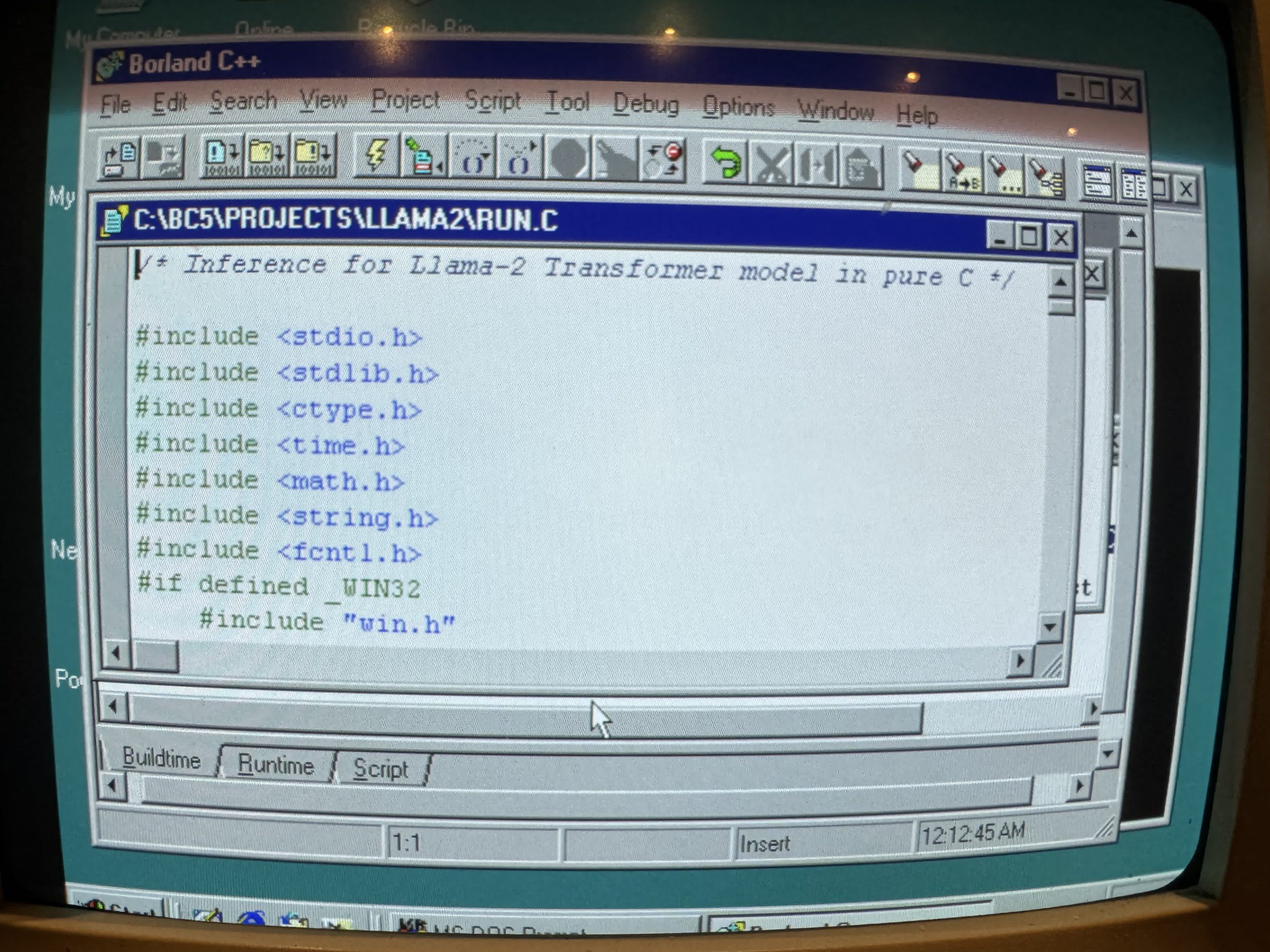

EXO Labs shared the experiment in a video on the social media platform X. The video shows the setup running on a dusty Elonex Pentium II system, where a model based on Llama2.c successfully carried out a prompt to write a story. It also performed at a satisfactory speed.

A big success, but a different goal

One of the biggest challenges the team faced was compiling and running a modern AI model on a 1998 operating system. After overcoming these difficulties, they were able to run a LLaMA model with 260,000 parameters at 39.31 tokens per second. Larger models ran far slower; for example, a model with 1 billion parameters reached only 0.0093 tokens per second.
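To put those two throughput figures in perspective, here is a rough back-of-the-envelope sketch. The 100-token story length is an assumption chosen purely for illustration, and the function name is hypothetical; only the two speeds come from the figures above.

```python
# Throughput figures reported above, in tokens per second.
SPEEDS = {
    "260K parameters": 39.31,
    "1B parameters": 0.0093,
}

# Hypothetical story length, chosen only for illustration.
STORY_TOKENS = 100


def seconds_to_generate(tokens: int, tokens_per_second: float) -> float:
    """Time needed to emit `tokens` tokens at a constant generation speed."""
    return tokens / tokens_per_second


if __name__ == "__main__":
    for name, tps in SPEEDS.items():
        secs = seconds_to_generate(STORY_TOKENS, tps)
        print(f"{name}: {secs:,.1f} s ({secs / 3600:.2f} h)")
```

Under that assumption, the small model would finish in about 2.5 seconds, while the 1-billion-parameter model would need roughly three hours for the same output.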

EXO Labs is working on a transformer architecture called “BitNet”. This technology uses ternary weights to reduce model size, making it possible to run a model with 7 billion parameters in just 1.38GB of storage. What’s even more impressive is that BitNet is designed to run on CPU only. This architecture can run a model with 100 billion parameters on a single CPU at a processing speed of 5-7 tokens per second.
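The storage figure follows from simple arithmetic: a weight limited to three values (-1, 0, +1) carries at most log2(3) ≈ 1.58 bits of information. The sketch below checks that estimate and shows one simple way to pack such weights five to a byte. The packing scheme and all function names here are assumptions for illustration, not BitNet's actual storage format.

```python
import math


def ternary_size_gb(num_params: int, bits_per_weight: float = math.log2(3)) -> float:
    """Ideal storage in decimal GB for weights restricted to {-1, 0, +1}."""
    return num_params * bits_per_weight / 8 / 1e9


def pack_trits(weights):
    """Pack ternary weights five per byte (3**5 = 243 fits in one byte)."""
    packed = bytearray()
    for i in range(0, len(weights), 5):
        value = 0
        # Build a base-3 number; the first weight ends up as the lowest digit.
        for w in reversed(weights[i:i + 5]):
            value = value * 3 + (w + 1)  # map -1/0/+1 to digits 0/1/2
        packed.append(value)
    return bytes(packed)


def unpack_trits(packed, count):
    """Inverse of pack_trits; `count` trims padding from the last byte."""
    weights = []
    for byte in packed:
        for _ in range(5):
            weights.append(byte % 3 - 1)
            byte //= 3
    return weights[:count]


if __name__ == "__main__":
    print(f"7B ternary weights: {ternary_size_gb(7_000_000_000):.2f} GB")
    sample = [-1, 0, 1, 1, 0, -1, 1]
    assert unpack_trits(pack_trits(sample), len(sample)) == sample
```

For 7 billion parameters the ideal estimate comes out at about 1.39 GB, in line with the 1.38GB cited above. By comparison, the same model stored with 16-bit weights would take 14 GB.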
