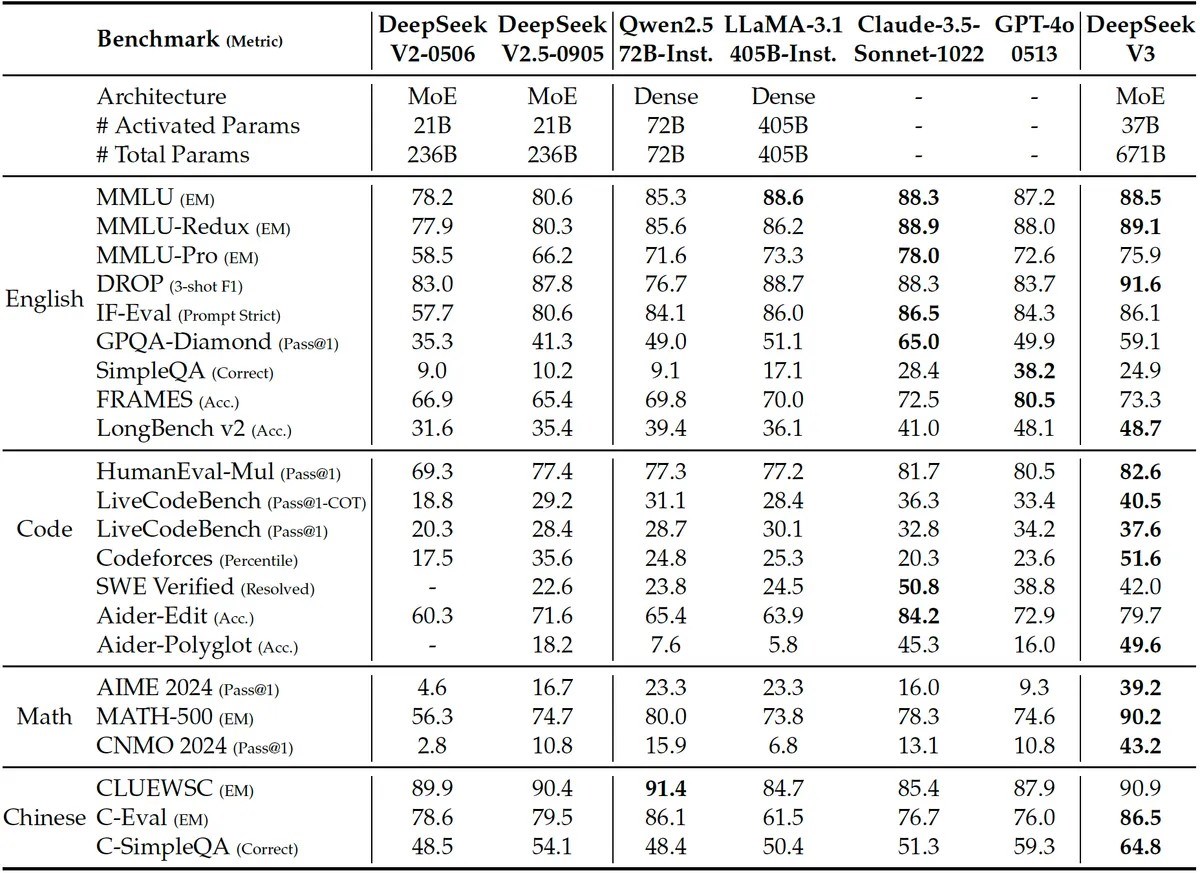

According to DeepSeek's paper, the company trained DeepSeek-V3, a Mixture-of-Experts (MoE) language model with 671 billion parameters, in just two months on a cluster of 2,048 Nvidia H800 GPUs. That amounts to about 2.8 million GPU hours. By comparison, Meta needed 11 times more compute (30.8 million GPU hours) to train Llama 3, which has 405 billion parameters, on a cluster of 16,384 H100 GPUs over 54 days.
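The figures above can be checked with some quick arithmetic. The sketch below uses only the numbers quoted in this article; the function names are illustrative, and the wall-clock estimate assumes every GPU in the cluster runs for the whole training period.

```python
# Figures quoted in the article (approximate, rounded values).
DEEPSEEK_V3_GPU_HOURS = 2_800_000
DEEPSEEK_V3_GPUS = 2_048
LLAMA_3_GPU_HOURS = 30_800_000


def compute_ratio(baseline_gpu_hours: int, candidate_gpu_hours: int) -> float:
    """How many times more GPU hours the baseline used than the candidate."""
    return baseline_gpu_hours / candidate_gpu_hours


def implied_days(gpu_hours: int, num_gpus: int) -> float:
    """Wall-clock days implied by a GPU-hour budget, assuming all GPUs run continuously."""
    return gpu_hours / num_gpus / 24


if __name__ == "__main__":
    ratio = compute_ratio(LLAMA_3_GPU_HOURS, DEEPSEEK_V3_GPU_HOURS)
    days = implied_days(DEEPSEEK_V3_GPU_HOURS, DEEPSEEK_V3_GPUS)
    print(f"Llama 3 used about {ratio:.0f}x the GPU hours of DeepSeek-V3")
    print(f"2.8M GPU hours on 2,048 GPUs is roughly {days:.0f} days")
```

The implied duration of just under two months is consistent with the training time DeepSeek reports.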

Various optimizations have been made

DeepSeek claims that it significantly reduced the computational and memory demands typically required for models at this scale by using advanced pipeline algorithms, an optimized communication framework, and FP8 low-precision computing.
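To give a rough sense of why lower precision reduces memory demands, here is a back-of-the-envelope sketch. It counts only the bytes needed to hold 671 billion parameters at a given width and deliberately ignores optimizer state, activations, and any higher-precision copies a real mixed-precision setup keeps, so it is not a description of DeepSeek's actual memory footprint.

```python
NUM_PARAMS = 671_000_000_000  # parameter count quoted in the article

# Storage width in bytes for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weight_storage_gb(num_params: int, fmt: str) -> float:
    """Gigabytes (10**9 bytes) needed just to store the weights in the given format."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


if __name__ == "__main__":
    for fmt in BYTES_PER_PARAM:
        print(f"{fmt}: {weight_storage_gb(NUM_PARAMS, fmt):,.0f} GB")
```

Halving the width of each value halves the bytes that have to be stored and moved, which is the basic reason 8-bit formats are attractive at this scale.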

While DeepSeek implemented dozens of optimization techniques to reduce the processing requirements of DeepSeek-V3, a few key technologies account for most of its impressive results.

DeepSeek says its DualPipe algorithm overlaps the computation and communication phases, thereby reducing inefficiencies in the pipeline. DualPipe minimized training bottlenecks, especially for the cross-node expert parallelism required by the MoE architecture, and this optimization allowed the cluster to process 14.8 trillion tokens with near-zero communication overhead during pre-training.
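The idea of hiding communication behind computation can be illustrated with a toy timing model. This is not DualPipe's actual schedule; it is a simplified sketch that assumes each training step has one compute phase and one communication phase that can run fully in parallel, and the millisecond values are made up for illustration.

```python
def step_time_serial(compute_ms: float, comm_ms: float) -> float:
    """Communication waits for computation to finish, so the costs add up."""
    return compute_ms + comm_ms


def step_time_overlapped(compute_ms: float, comm_ms: float) -> float:
    """Both phases run concurrently, so the step takes as long as the slower one."""
    return max(compute_ms, comm_ms)


def exposed_comm(compute_ms: float, comm_ms: float) -> float:
    """Communication time that is NOT hidden behind computation."""
    return step_time_overlapped(compute_ms, comm_ms) - compute_ms


if __name__ == "__main__":
    compute_ms, comm_ms = 10.0, 8.0  # hypothetical per-step costs
    print("serial:", step_time_serial(compute_ms, comm_ms))
    print("overlapped:", step_time_overlapped(compute_ms, comm_ms))
    print("exposed communication:", exposed_comm(compute_ms, comm_ms))
```

In this model the communication overhead drops to zero whenever communication takes no longer than the computation it overlaps with, which is the sense in which overhead can be described as near zero.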

In addition to implementing DualPipe, DeepSeek restricted each token to being routed to at most four nodes, which caps the number of nodes involved in communication. This reduced traffic and enabled communication and computation to overlap effectively.
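A minimal sketch of what node-limited routing could look like for a single token follows. The article only states the four-node cap; everything else here is an assumption for illustration, including the function name, the toy sizes, and the rule of ranking nodes by their single best expert score, which may differ from the rule DeepSeek actually uses.

```python
def node_limited_topk(scores, experts_per_node, top_k, max_nodes):
    """Pick top_k experts for one token, drawn from at most max_nodes nodes.

    scores: one affinity score per expert; expert e lives on node e // experts_per_node.
    """
    num_nodes = len(scores) // experts_per_node

    # Rank nodes by their best expert score (an illustrative choice).
    node_best = []
    for node in range(num_nodes):
        start = node * experts_per_node
        node_best.append((max(scores[start:start + experts_per_node]), node))
    allowed = {node for _, node in sorted(node_best, reverse=True)[:max_nodes]}

    # Ordinary top-k selection, restricted to experts on the allowed nodes.
    candidates = [(s, e) for e, s in enumerate(scores)
                  if e // experts_per_node in allowed]
    chosen = sorted(candidates, reverse=True)[:top_k]
    return sorted(e for _, e in chosen)


if __name__ == "__main__":
    # Toy setup: 8 experts spread over 4 nodes, 2 experts per node.
    scores = [0.9, 0.1, 0.8, 0.2, 0.7, 0.6, 0.05, 0.3]
    experts = node_limited_topk(scores, experts_per_node=2, top_k=3, max_nodes=2)
    print("experts:", experts)
    print("nodes touched:", sorted({e // 2 for e in experts}))
```

In the toy example an unrestricted top-3 would pick an expert on a third node; with the cap, the token's traffic stays within two nodes at the cost of choosing a slightly lower-scoring expert.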

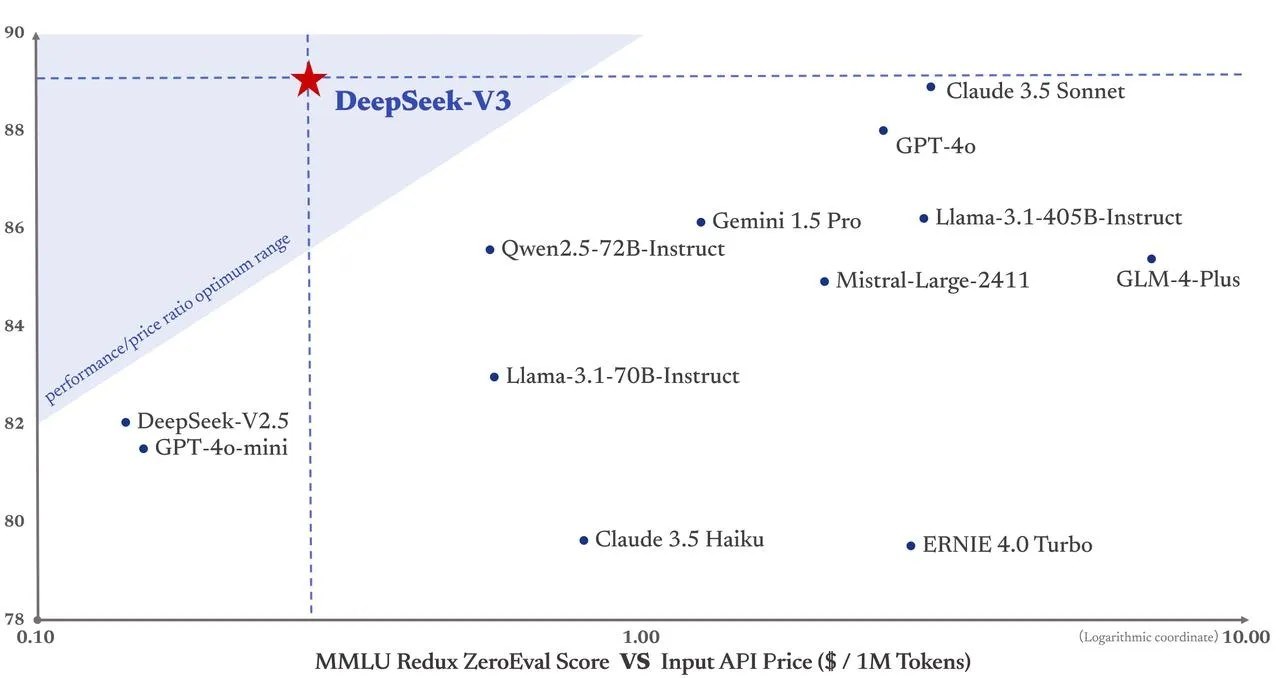

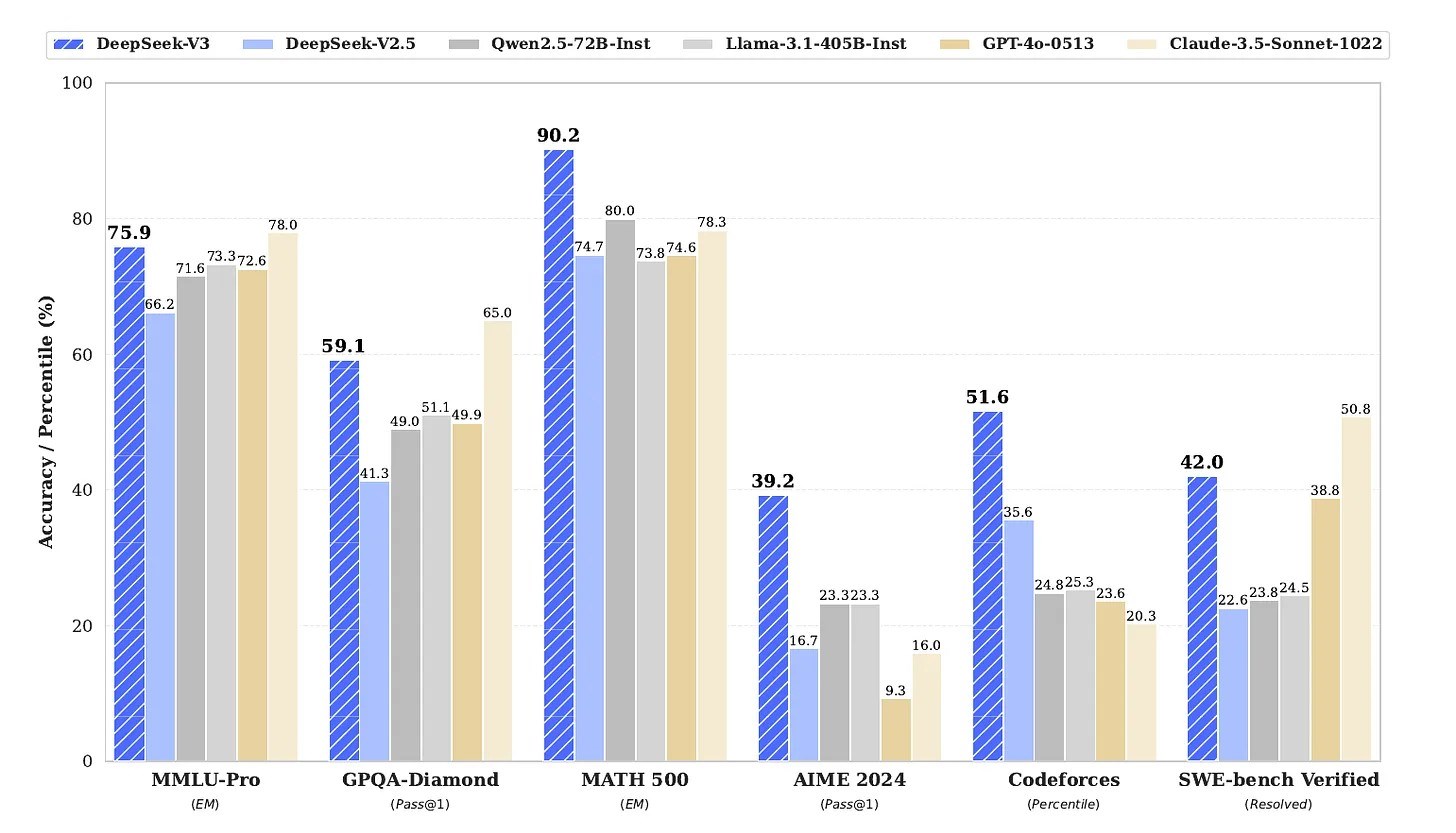

How does DeepSeek-V3 perform?

The DeepSeek team acknowledges that deploying the DeepSeek-V3 model requires advanced hardware as well as a deployment strategy that separates the prefilling and decoding phases, which may be out of reach for smaller companies with limited resources.

Source

https://www.tomshardware.com/tech-industry/artificial-intelligence/chinese-ai-company-says-breakthroughs-enabled-creating-a-leading-edge-ai-model-with-11x-less-compute-deepseeks-optimizations-highlight-limits-of-us-sanctions

https://github.com/deepseek-ai/DeepSeek-V3/blob/main/DeepSeek_V3.pdf
